Tired of manually clicking through Proxmox every time you need a new VM? Setting up fresh Ubuntu machines by hand is not just tedious—it’s inefficient and prone to human error, especially when managing multiple environments. In this comprehensive guide, I’ll show you how to automate the entire process using Packer to create reusable Ubuntu 24.04 templates in Proxmox.

Demo Video

The following video demonstrates the complete process of creating an Ubuntu 24.04 template in Proxmox using Packer:

In this demonstration, you can see:

- The Proxmox environment with 12 CPUs and 32GB RAM

- Running the build script to create the template

- The automated installation process via cloud-init

- The final result: a ready-to-use Ubuntu 24.04 template

As shown in the video, the entire process takes about 6 minutes and requires no manual intervention once started.

Introduction to Packer Proxmox Automation

Infrastructure automation has become essential for modern IT operations. Whether you’re running a home lab or managing enterprise infrastructure, manually creating virtual machines is time-consuming and inconsistent. Packer, a HashiCorp tool, solves this problem by providing a standardized way to create machine images across various platforms, including Proxmox.

In this guide, we’ll explore how to use Packer to automate system installations and create Ubuntu 24.04 templates in Proxmox. These templates can then be used to quickly spin up new VMs with consistent configurations, saving time and reducing errors.

What You’ll Learn

- Setting up Packer for Proxmox integration

- Creating an autoinstall configuration for unattended Ubuntu 24.04 installation

- Configuring cloud-init for first-boot customization

- Building a complete template with pre-installed software

- Using the template to deploy new VMs

Prerequisites

Before we begin, ensure you have the following:

- Proxmox Virtual Environment (tested with version 8.4.0)

- Packer installed (v1.12.0 or newer)

- Ubuntu 24.04 LTS ISO (ubuntu-24.04.2-live-server-amd64.iso) uploaded to your Proxmox storage

- Proxmox API token with appropriate permissions

- Basic understanding of Linux command line

Understanding the Project Structure

The code we’ll use is organized as follows:

Proxmox/Packer/

├── build.sh # Main build script

├── ubuntu-2404.pkr.hcl # Packer configuration

├── variables.pkrvars.hcl # Variables configuration

├── secrets.pkrvars.hcl # Sensitive variables (credentials)

└── http/ # Cloud-init configuration

├── meta-data # Instance metadata

└── user-data # User configuration (autoinstall)

Each file plays a crucial role in the automation process. These configuration files work together to define exactly how the template should be created:

- build.sh: Orchestrates the Packer build process

- ubuntu-2404.pkr.hcl: Main configuration defining the VM template

- variables.pkrvars.hcl: Contains configurable settings

- secrets.pkrvars.hcl: Stores sensitive information (API tokens, passwords)

- http/user-data: Contains the autoinstall configuration for unattended installation

- http/meta-data: Provides instance metadata for cloud-init

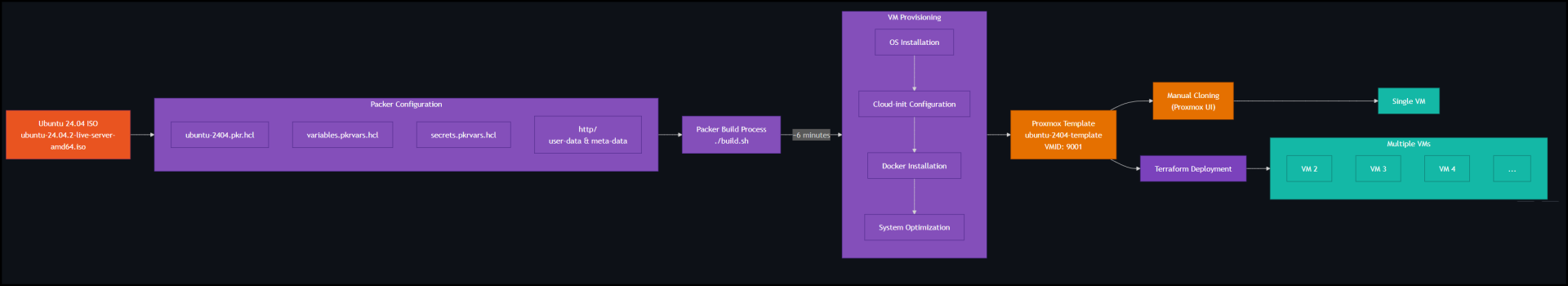

Workflow Diagram

The following diagram illustrates the complete workflow we’ll implement:

As you can see, the process starts with the Ubuntu 24.04 ISO and ends with deployable VMs, with Packer handling all the automation in between.

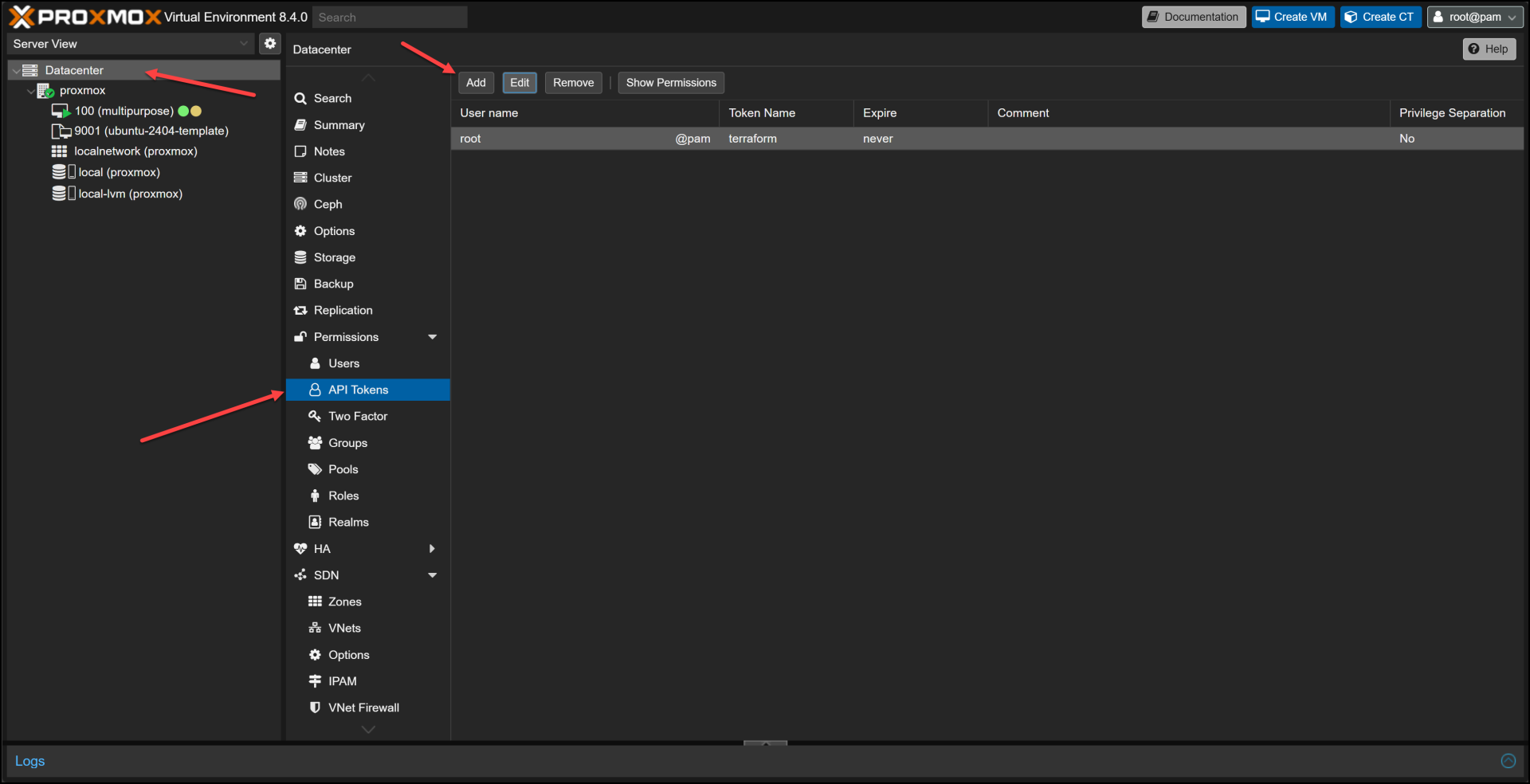

Setting Up Proxmox API Access

Before we can use Packer with Proxmox, we need to set up API access. Proxmox offers token-based authentication, which is more secure than using username/password combinations.

- In the Proxmox web UI, navigate to Datacenter → Permissions → API Tokens

- Click “Add” to create a new token

- Select your user (e.g., root@pam)

- Give the token a descriptive ID (e.g., “terraform“)

- Decide whether to check “Privilege Separation” (for production, this should be checked)

- Click “Add” and make note of both the token ID and secret

This API token will be used in our secrets.pkrvars.hcl file to authenticate with Proxmox.

The Magic of Autoinstall Configuration

One of the key components of automating Ubuntu installation is the “autoinstall” configuration. This replaces older approaches like preseed files or kickstart files with a more modern YAML-based configuration format.

Our autoinstall configuration is stored in the http/user-data file and includes several important sections:

#cloud-config

autoinst

version: 1

locale: en_US

keyboard:

layout: us

identity:

hostname: ubuntu-server

username: ubuntu

password: "$6$rounds=4096$ntlX/dlo6b$HXaLN4RcLIGaEDdQdR2VTYi9pslSeXWL131MqaakqE285Nv0kW9KRontQYivCbycZerUMcjVsuLl2V8bbdadI1"

ssh:

install-server: true

allow-pw: true

ssh_quiet_keygen: true

network:

network:

version: 2

ethernets:

any:

match:

name: en*

dhcp4: true

dhcp6: false

packages:

- qemu-guest-agent

- sudo

- openssh-server

# Additional packages

storage:

layout:

name: direct

swap:

size: 0

# Additional configuration...

This YAML configuration defines everything the Ubuntu installer needs to know:

- System locale and keyboard layout

- User credentials (username, password, SSH settings)

- Network configuration (using DHCP in this case)

- Storage layout (directly formatted disks)

- Packages to install initially

The beauty of this approach is that the entire installation process runs without any human intervention, allowing Packer to proceed directly to the provisioning phase. The system will reboot after the installation process is complete to apply all changes.

Understanding the Packer Configuration

The heart of our automation is the ubuntu-2404.pkr.hcl file. This core configuration file defines the complete template creation process in three main sections:

- Variables – Define parameters for the build

- Source – Configure the VM specifications and installation method

- Build – Define the build steps and provisioning

Key Configuration Elements

Let’s explore some of the most important parts of the configuration:

VM Specifications

# VM System Settings

qemu_agent = true

cores = "2"

memory = "2048"

# VM Hard Disk Settings

scsi_controller = "virtio-scsi-single"

disks {

disk_size = "20G"

format = "raw"

storage_pool = "local-lvm"

type = "scsi"

ssd = true

}

This section defines the basic VM resources – 2 CPU cores, 2GB RAM, and a 20GB disk. These are just for the template; when you deploy actual VMs, you can customize these values.

Cloud-Init Configuration

# VM Cloud-Init Settings

cloud_init = true

cloud_init_storage_pool = "local-lvm"

# Cloud-init config via additional ISO

additional_iso_files {

type = "ide"

index = 1

iso_storage_pool = "local"

unmount = true

keep_cdrom_device = false

cd_files = [

"./http/meta-data",

"./http/user-data"

]

cd_label = "cidata"

}

This crucial section enables cloud-init for the template and specifies that we’ll be providing the configuration via a virtual CD-ROM. Packer will create this CD-ROM with our meta-data and user-data files during the build process.

Boot Configuration

# Explicitly set boot order to prefer scsi0 (installed disk) over ide devices

boot = "order=scsi0;net0;ide0"

# PACKER Boot Commands

boot_wait = "10s"

boot_command = [

"<esc><wait>",

"e<wait>",

"<down><down><down><end>",

" autoinstall quiet ds=nocloud",

"<f10><wait>",

"<wait1m>",

"yes<enter>"

]

This section handles the initial boot process. The boot parameter sets the boot order to prefer the SCSI disk once installed. The boot_command is especially interesting – it simulates keypresses to navigate the GRUB menu and add the autoinstall parameter, telling Ubuntu to use our autoinstall configuration.

Provisioners

provisioner "shell" {

inline = [

"while [ ! -f /var/lib/cloud/instance/boot-finished ]; do echo 'Waiting for cloud-init...'; sleep 1; done",

"sudo systemctl enable qemu-guest-agent",

"sudo systemctl start qemu-guest-agent",

"sudo cloud-init clean",

# Additional commands...

]

}

# Install Docker

provisioner "shell" {

inline = [

"echo 'Installing Docker...'",

# Docker installation commands...

]

}

Provisioners run after the OS is installed. Here, we’re running shell commands to:

- Wait for cloud-init to finish

- Enable and start the QEMU guest agent

- Clean up cloud-init

- Install Docker with a specific version

These provisioners allow us to customize the template beyond the basic OS installation.

The Build Process

Now that we understand the configuration, let’s look at the build process itself, which is handled by our build.sh script:

#!/bin/bash

# Navigate to the directory containing this script

cd "$(dirname "$0")"

# Check if Packer is installed

if ! command -v packer &> /dev/null; then

echo "Packer is not installed. Please install it from https://www.packer.io/downloads"

exit 1

fi

# Check if secrets file exists

if [ ! -f "secrets.pkrvars.hcl" ]; then

echo "Creating example secrets file..."

cp secrets.pkrvars.hcl.example secrets.pkrvars.hcl

echo "Please edit secrets.pkrvars.hcl with your actual credentials before running this script again."

exit 1

fi

# Initialize Packer plugins

echo "Initializing Packer plugins..."

packer init ubuntu-2404.pkr.hcl

# Run Packer build with both variable files

echo "Starting Packer build..."

packer build -force -on-error=ask

-var-file=variables.pkrvars.hcl

-var-file=secrets.pkrvars.hcl

ubuntu-2404.pkr.hcl

echo "Build process completed!"

This script:

- Checks that Packer is installed

- Verifies that the secrets file exists

- Initializes the required Packer plugins

- Runs the Packer build with our variable files

Demo: Watching the Build Process

Let me walk you through what happens when you run the build script. This corresponds to the video demonstration provided.

When you run ./build.sh in the Proxmox/Packer directory, the following sequence unfolds:

- Initialization: Packer checks and installs required plugins

- VM Creation: Packer instructs Proxmox to create a new VM with ID 9001

- ISO Mounting: Proxmox attaches the Ubuntu 24.04 ISO and the cloud-init ISO

- Installation: The Ubuntu installer runs with our autoinstall configuration

- Provisioning: After installation, Packer connects via SSH and runs our provisioners

- Template Conversion: Finally, the VM is converted to a template

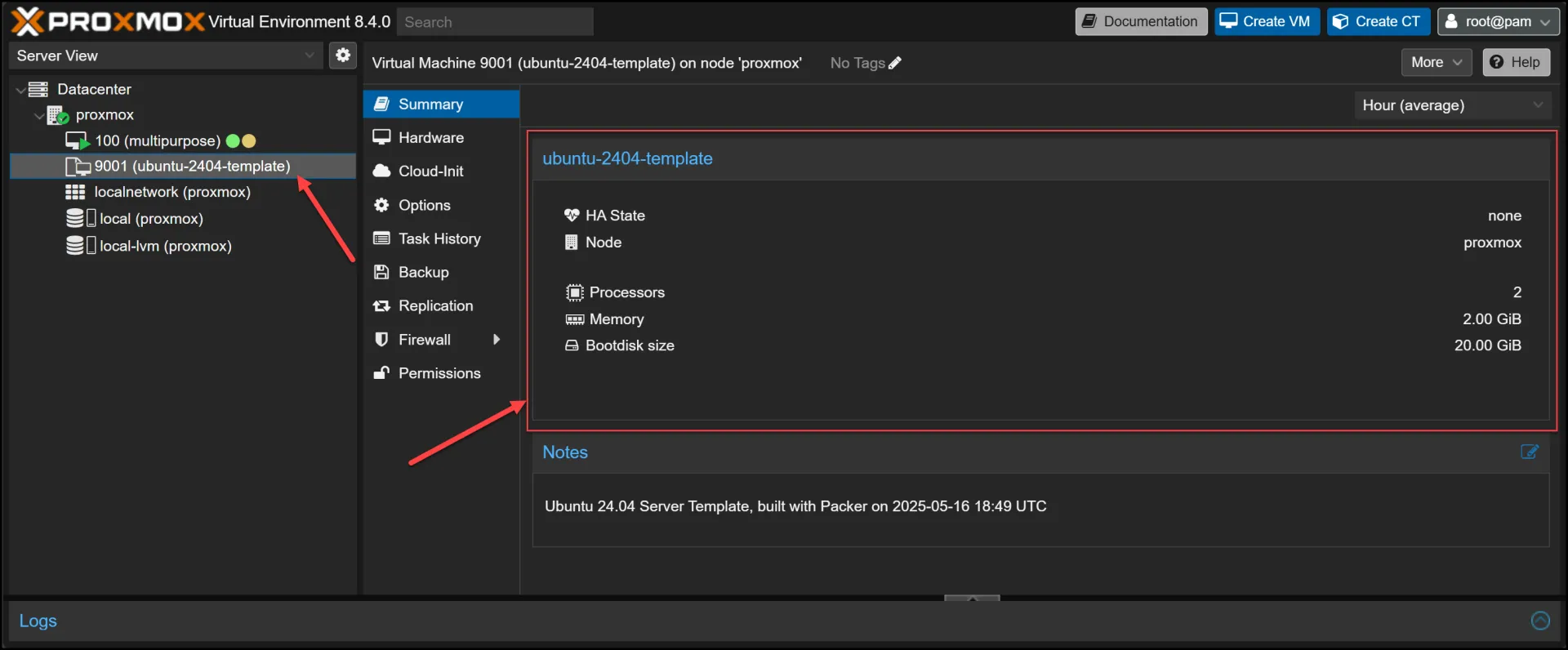

The whole build process took me 6 minutes and 2 seconds! Now we have a template we can use to create VMs from easily and quickly.

In the Proxmox UI, you’ll see a new VM appear, and in the console, you can watch the installation progress. Once complete, the VM is converted to a template with ID 9001 and name “ubuntu-2404-template”.

The entire process takes about 6 minutes, after which you have a ready-to-use template with:

- Ubuntu 24.04 LTS installed

- Docker pre-installed (version 27.5.1)

- Cloud-init configured for customization on first boot

- QEMU guest agent enabled for better integration with Proxmox

Key Technical Components

Let’s dive deeper into some of the key technical components that make this automation possible:

Cloud-Init Integration

Cloud-init is a standard for initializing cloud instances. In our case, we’re using it to customize the VM on first boot. This works through two key files:

- meta-data: Contains basic instance metadata like instance-id and hostname

- user-data: Contains the actual configuration in cloud-config format

For our template, we’re using cloud-init in two ways:

- For the initial installation (via autoinstall)

- For subsequent VM customization when cloning the template

This dual approach ensures both unattended installation and flexible customization for deployed VMs.

Boot Command Magic

The boot command in our Packer configuration deserves special attention:

boot_command = [

"<esc><wait>",

"e<wait>",

"<down><down><down><end>",

" autoinstall quiet ds=nocloud",

"<f10><wait>",

"<wait1m>",

"yes<enter>"

]

This simulates keypresses to:

- Press ESC to access the GRUB menu

- Press ‘e’ to edit the boot entry

- Navigate to the end of the kernel command line

- Add autoinstall quiet ds=nocloud to enable autoinstall

- Press F10 to boot with these options

- Wait for the installer to start

- Respond “yes” to the partitioning prompt

This sequence is crucial for enabling unattended installation and can be one of the trickiest parts to get right, as it depends on the specific Ubuntu version and installer.

Docker Pre-installation

Our configuration pre-installs Docker, which is a common requirement for many environments:

provisioner "shell" {

inline = [

"echo 'Installing Docker...'",

# Add Docker's official GPG key

"sudo apt-get update",

"sudo apt-get install -y ca-certificates curl gnupg",

# Docker installation steps...

]

}

We’re following Docker’s official installation instructions and pinning to a specific version (27.5.1) for consistency. This approach ensures that every VM created from the template has identical Docker installations.

Troubleshooting Common Issues

Automating system installations can sometimes lead to unexpected issues. When working with Packer and Proxmox, you might encounter some of these common problems listed below.

But first, it’s always a good idea to check the log file located at /var/log/installer/autoinstall-user-data can be crucial for troubleshooting. This file is generated after an Ubuntu installation and can help identify and resolve issues related to the autoinstall configuration.

Below are some common issues you may encounter:

Boot Command Timing Issues

The boot command relies on precise timing, which can vary depending on your hardware. If you encounter issues with the installer not receiving the autoinstall parameter, try adjusting the <wait> times in the boot command.

CD-ROM Ejection Issues

One common issue is the failure to properly eject the CD-ROM after installation. Our configuration addresses this in two ways:

- Setting the correct boot order: boot = “order=scsi0;net0;ide0”

- Adding explicit ejection commands in the final provisioned – although these didn’t work for me, and the boot order above is what allowed the installation to proceed successfully.

Cloud-Init Cleanup

For the template to work properly, cloud-init needs to be cleaned before conversion:

sudo cloud-init clean --logs

This ensures that when VMs are created from the template, cloud-init runs fresh and applies the new configuration correctly.

Next Steps: Using Your Template

Now that you have a reusable template, what’s next? Here are some ways to use it:

Consider using the autoinstall format for performing unattended and reproducible system installations, especially in corporate environments where replicable OS installations are crucial.

Manual VM Creation

You can manually create VMs from the template through the Proxmox UI:

- Go to the template in the Proxmox UI

- Click “Clone”

- Choose “Full Clone” or “Linked Clone”

- Configure the new VM resources

- Start the VM

Terraform Integration

For true automation, use Terraform to deploy VMs from your template, we have a whole separate blog post talking about that, but below is a quick config sample:

resource "proxmox_vm_qemu" "ubuntu_vm" {

name = "ubuntu-server"

target_node = "proxmox"

clone = "ubuntu-2404-template"

cores = 4

memory = 8192

# Additional configuration...

}

This allows you to define your infrastructure as code and deploy consistent VMs with a single command.

Conclusion

Creating automated templates with Packer and Proxmox is a game-changer for VM deployment. By investing a little time in setting up this automation, you’ll save countless hours of repetitive work and ensure consistency across your virtual infrastructure.

The approach we’ve covered uses modern technologies like cloud-init and autoinstall to create a flexible, customizable template system that can adapt to various needs while maintaining standardization.

Whether you’re running a home lab or managing enterprise infrastructure, this automation approach will streamline your workflow and reduce errors. No more clicking through installation wizards or manually configuring each new VM!

In the next article, we’ll explore how to use Terraform to deploy VMs from this template, completing the automation pipeline from template creation to VM deployment.

Additional Resources

- Terraform Proxmox Ubuntu 24.04

- Packer Documentation

- Proxmox VE

- Cloud-Init Documentation

- Ubuntu Autoinstall Documentation

By mastering these tools and techniques, you’ll be well on your way to a fully automated VM deployment workflow that saves time, reduces errors, and enhances consistency across your infrastructure.

Code

Hi and Welcome!

Join the Newsletter and get FREE access to all my Source Code along with a couple of gifts.